The Fastest-Growing AI Standard Is Becoming Cybersecurity's Biggest Blind Spot

I just read this VentureBeat article on Anthropic's Model Context Protocol (MCP), now the most rapidly adopted AI integration standard of 2025:

MCP stacks have a 92% exploit probability

I've been chatting with several AI builder teams lately, and the story is similar everywhere. Everyone is racing to get MCP integrations working and show results, while security takes a back seat until "later." That "later" might be too late. The result is a new class of agentic AI risks: uncontrolled plugin access, weak context validation, and missing governance across MCP servers.

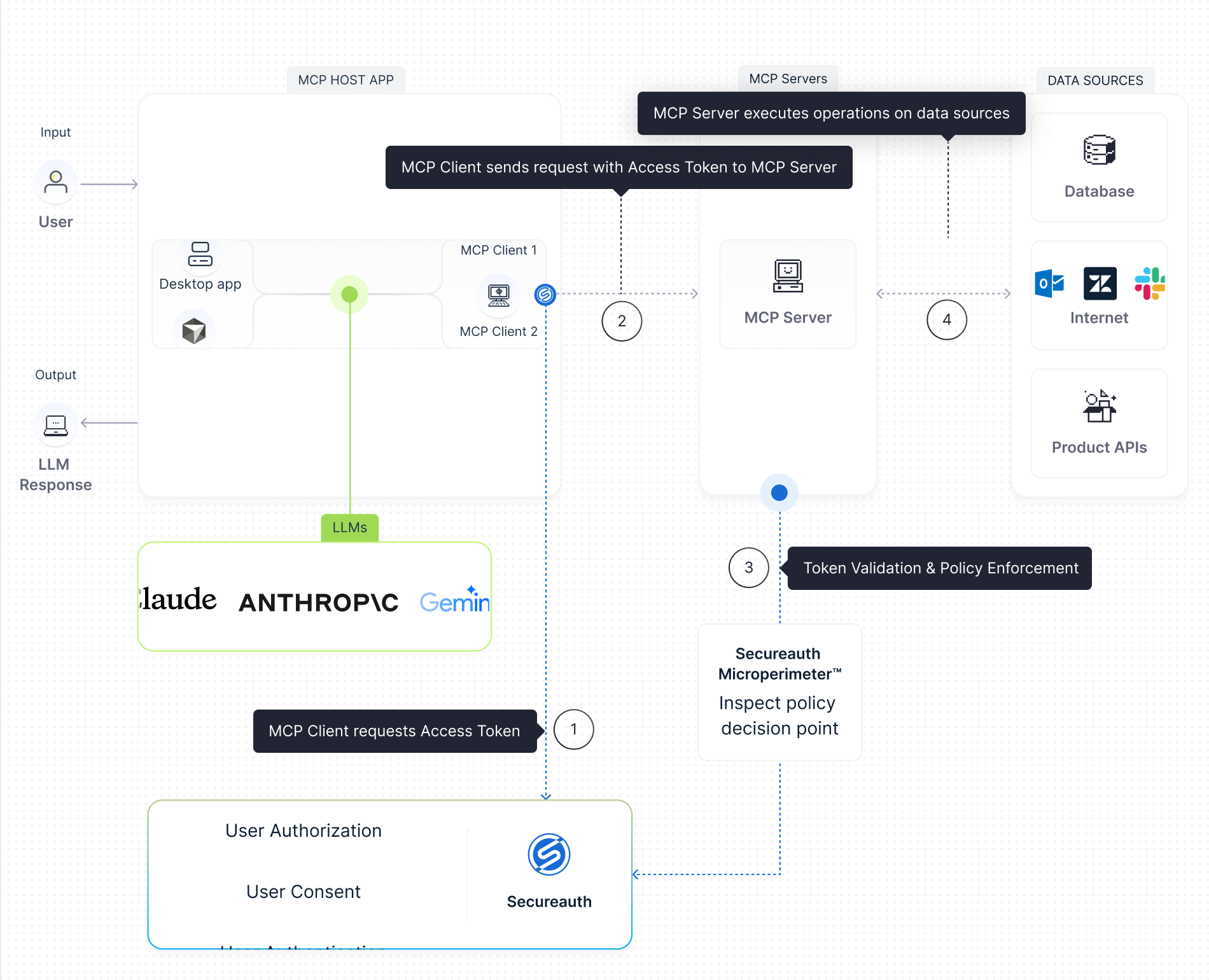

At SecureAuth, we believe this is where identity-first security becomes critical. Our foundation of policy-driven controls, open standards, delegated authentication, and organization-aware access positions us to serve as the MCP Security Control Plane. We help in-house agent builders and agentic SaaS companies build securely and deliver safer, centrally visible, and auditable agent solutions, with:

- Authentication hardening (OAuth 2.1 / OIDC)

- Contextual authorization and policy binding

- Risk-adaptive, device-aware enforcement

- Centralized registration and visibility across MCP servers

- Machine identity lifecycle governance

We are already teaming up with AI builders to bring these security controls directly into their ecosystems.

MCP Security Control Plane - SecureAuth

If you're experimenting with MCP in your enterprise, building a SaaS service ecosystem for agents, or deploying agentic AI, I'd love to hear your perspective. What challenges are you running into? What are you seeing out there: what's working and what's breaking? Your insights will help us refine our offering for this evolving space. Let's connect and start a conversation.