Risk analyzers

Risk analyzers power the Risk Engine by evaluating key security factors such as user identity, device trust, and environmental risks. These analyzers calculate a Level of Assurance (LOA) score, helping ensure secure and adaptive authentication decisions.

Device risk

What it does

Verifies whether the user is logging in from a trusted device.

Applies device-specific authentication policies to reduce the risk of unauthorized access.

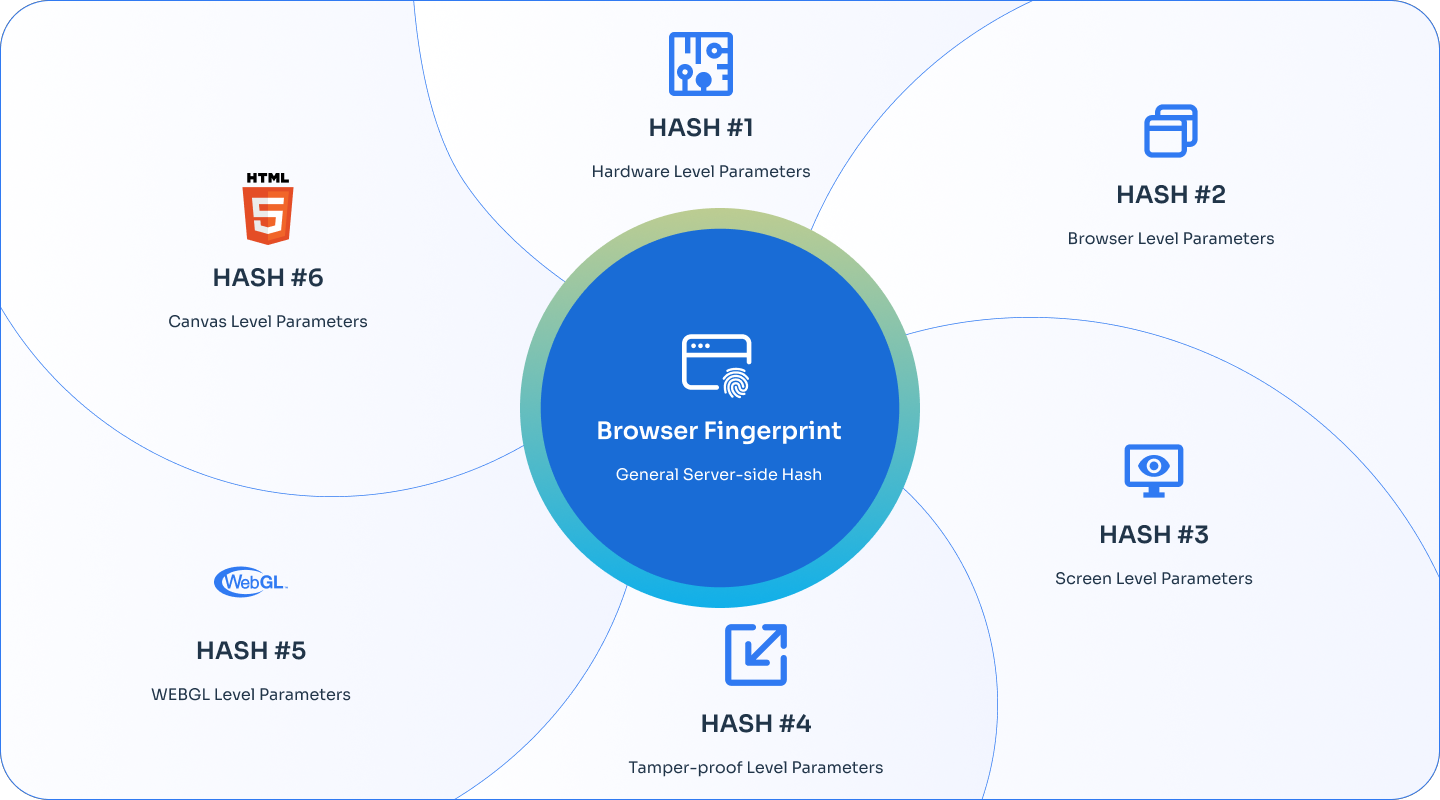

Device Browser Fingerprint (DBFP) analyzer

Device Browser Fingerprint (DBFP) identifies the device behind each login. It collects more than 30 details from a user's device, such as browser type, operating system, screen size, and plugins, and combines them into a unique "fingerprint" for that device.

The system uses these fingerprints to track how users typically log in. By spotting changes or unusual activity, DBFP can detect potential fraud, helping secure your accounts while keeping trusted users’ access seamless.
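As a rough illustration of the fingerprinting idea, the sketch below hashes a handful of device attributes into a stable identifier and lists which attributes changed between two logins. The attribute names, the use of SHA-256, and the helper functions `fingerprint` and `changed_attributes` are assumptions made for this example, not the DBFP analyzer's actual implementation.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Return a stable SHA-256 digest of the collected device attributes."""
    # Sort keys so the same attributes always serialize to the same string.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_attributes(known: dict, current: dict) -> list:
    """List the attribute names whose values differ between two snapshots."""
    keys = set(known) | set(current)
    return sorted(k for k in keys if known.get(k) != current.get(k))


# Hypothetical attribute snapshots; a real collector would gather 30+ details.
known = {
    "browser": "Firefox 128",
    "os": "Windows 11",
    "screen": "1920x1080",
    "plugins": ["pdf-viewer"],
}
current = dict(known, os="Linux", screen="1366x768")

same_device = fingerprint(known) == fingerprint(current)
differences = changed_attributes(known, current)
```

Comparing individual attributes, rather than only the digest, lets a policy distinguish a minor change (for example, a browser update) from a completely different device.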

Browser Trust (BT) analyzer

Browser Trust (BT) uses AI and machine learning (AIML) to make authentication smarter and more adaptive. Instead of relying on rigid rules, BT dynamically analyzes user behavior, creating a personalized risk score for each login attempt.

What BT evaluates:

Login Patterns: Tracks typical login times and days.

Device and Browser Usage: Checks whether the device and browser match previous logins.

By learning a user’s behavior, BT gets more accurate over time, adjusting security levels based on real-time data.

John Doe’s device pattern example

John Doe uses multiple devices each day:

Morning: Phone

Afternoon: Work laptop

Evening: Personal laptop

The Risk Engine assigns each device a unique fingerprint. If John suddenly logs in from his work laptop in the middle of the night, the system flags it as unusual and may require extra verification.

This approach balances security and convenience, protecting against threats while minimizing user friction.

Location and network risk

What it does

Identifies and flags suspicious access attempts from unrecognized or high-risk locations.

Detects malicious IPs, including those from VPNs, Tor, and proxies.

Geo-IP analyzer

The Geo-IP analyzer assesses risk based on the number of successful logins from a specific IP and how many other users share that IP. It calculates the score using these factors:

New IP – An unknown IP poses a higher risk.

Confirmed & Not Exclusive – The risk depends on how many other users share the IP and the number of successful logins by this user.

Confirmed & Exclusive – A lower risk if only this user logs in from the IP, with the score reflecting the number of successful logins.

IP Reputation – Identifies high-risk factors such as Tor, VPNs, proxies, and bot activity. If any are detected, the Geo-IP analyzer score can decrease. Depending on admin settings, the system can also apply a cutoff, reducing the LOA score for the corresponding domain or even bringing the overall LOA score to 0.

Impossible Travel – Flags login attempts from geographically distant locations within a timeframe too short for realistic travel. When a user logs in from two such locations, the Geo-IP analyzer score decreases. Admin settings can trigger a cutoff in these cases, reducing the LOA score for the Network/Location domain and potentially lowering the overall LOA score to 0.
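One common way to implement an impossible-travel check is to compute the great-circle distance between two consecutive logins and compare the implied speed against a threshold. The sketch below uses the haversine formula. The `Login` class, the function names, and the 900 km/h threshold are assumptions for illustration; the source does not state how the Geo-IP analyzer measures distance or which threshold it applies.

```python
from dataclasses import dataclass
from datetime import datetime
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
MAX_SPEED_KMH = 900.0  # assumed threshold, roughly airliner cruising speed


@dataclass
class Login:
    lat: float
    lon: float
    when: datetime


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two latitude/longitude points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlam = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_impossible_travel(prev: Login, curr: Login,
                         max_speed_kmh: float = MAX_SPEED_KMH) -> bool:
    """True when the speed implied by two logins exceeds the threshold."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    hours = abs((curr.when - prev.when).total_seconds()) / 3600
    if hours == 0:
        # Simultaneous logins are only plausible from the same place.
        return distance > 0
    return distance / hours > max_speed_kmh


new_york = Login(40.7128, -74.0060, datetime(2025, 1, 12, 9, 0))
london_1h = Login(51.5074, -0.1278, datetime(2025, 1, 12, 10, 0))
london_8h = Login(51.5074, -0.1278, datetime(2025, 1, 12, 17, 0))

flag_1h = is_impossible_travel(new_york, london_1h)  # one hour apart
flag_8h = is_impossible_travel(new_york, london_8h)  # eight hours apart
```

Geo-IP coordinates are approximate, so a production check would likely add tolerance for lookup error and for known VPN or proxy exits before lowering a score.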

Trusted IP analyzer (AIML model)

This model learns a user's typical IP usage patterns based on the time of day and the day of the week. It identifies when and where a user usually logs in and flags unusual activity.

IP – Tracks the user's regular IP addresses.

Time of Day – Monitors typical login times (e.g., during work hours).

Day of the Week – Identifies whether the user logs in more often on weekdays or weekends.

Example

If a user usually logs in from their office IP during work hours (9 AM – 6 PM, Monday to Friday) but suddenly logs in at 2 AM on a Saturday from a different IP, the model flags it as suspicious.

This model continuously learns a user's normal login behavior and alerts on deviations.
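To make the three signals concrete, here is a minimal frequency-based sketch that scores a login by how often the same IP, a similar hour, and the same day type (weekday or weekend) appear in the user's history. It is a simple stand-in chosen for illustration, not the AIML model itself; the `familiarity` function, the equal weighting, and the one-hour tolerance are all assumptions.

```python
from datetime import datetime

OFFICE_IP = "203.0.113.10"  # documentation-range address, illustrative only


def hour_gap(a: int, b: int) -> int:
    """Distance between two hours on a 24-hour clock (23 and 0 are 1 apart)."""
    d = abs(a - b)
    return min(d, 24 - d)


def is_weekend(t: datetime) -> bool:
    return t.weekday() >= 5


def familiarity(history, ip: str, when: datetime) -> float:
    """Score in [0, 1]: average share of past logins matching IP, hour, and day type."""
    if not history:
        return 0.0
    n = len(history)
    same_ip = sum(1 for past_ip, _ in history if past_ip == ip) / n
    same_hour = sum(1 for _, t in history if hour_gap(t.hour, when.hour) <= 1) / n
    same_day_type = sum(1 for _, t in history if is_weekend(t) == is_weekend(when)) / n
    return (same_ip + same_hour + same_day_type) / 3


# Hypothetical history: two office logins per weekday over two weeks.
history = [
    (OFFICE_IP, datetime(2024, 1, day, hour))
    for day in range(1, 13)
    for hour in (9, 14)
    if datetime(2024, 1, day).weekday() < 5
]

usual = familiarity(history, OFFICE_IP, datetime(2024, 1, 16, 10))    # Tuesday, 10 AM
odd = familiarity(history, "198.51.100.7", datetime(2024, 1, 20, 2))  # Saturday, 2 AM
```

In this sketch the Tuesday office login scores high, while the 2 AM Saturday login from an unseen IP matches nothing in the history and scores 0.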

Trusted Location analyzer (AIML model)

The Trusted Location analyzer predicts the likelihood of a user being in a specific location at a given time. It analyzes typical patterns based on time of day, day of the week, and location to determine whether the current login behavior is normal or suspicious.

Example

If a user typically logs in from home in the evening but suddenly attempts to log in from a different city at 3 AM, the model flags it as suspicious based on location-time patterns.

Behavioral risk

What it does

Continuously monitors user behavior to detect deviations from normal patterns.

Adjusts authentication requirements based on behavioral risk scores to provide a seamless and secure user experience.

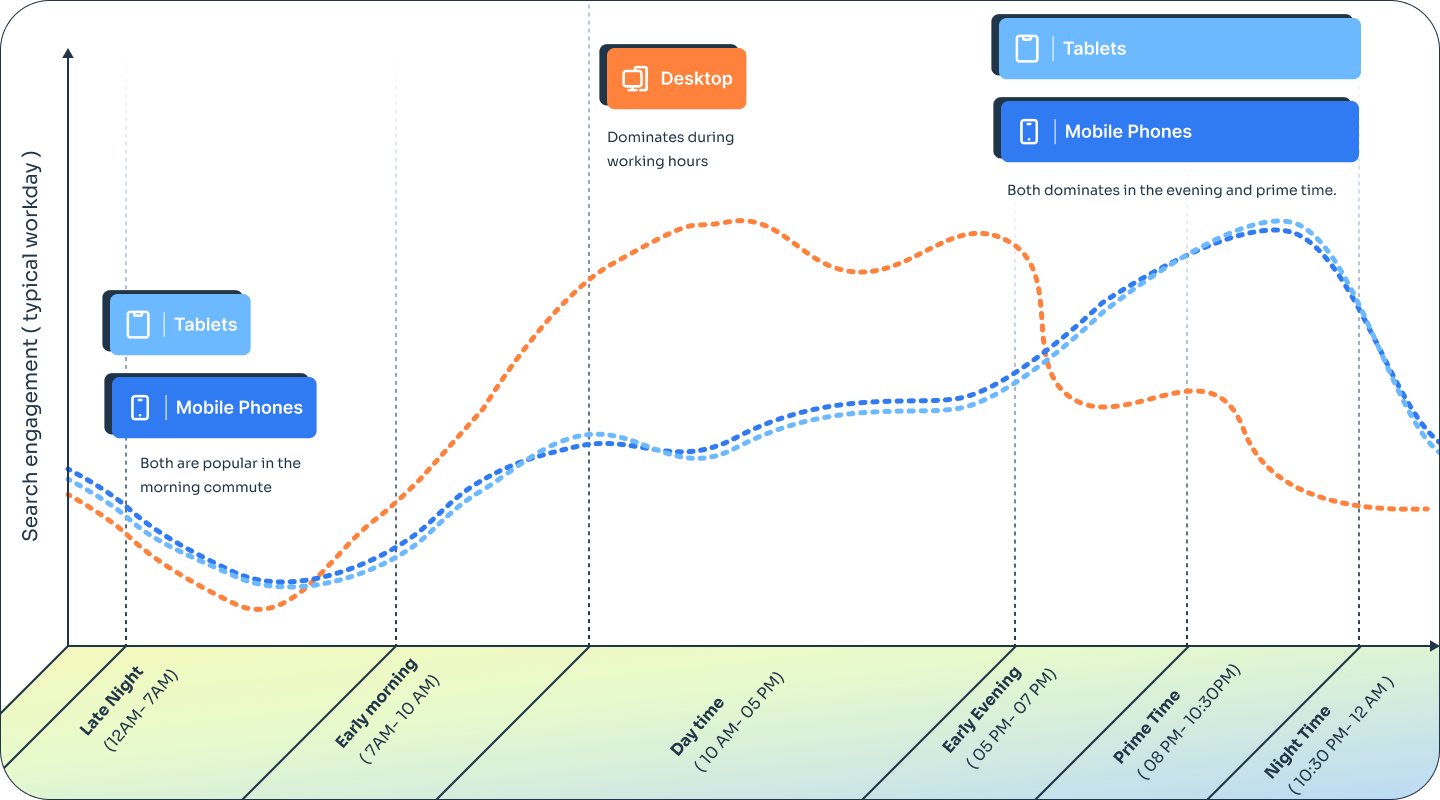

The Behavioral Risk model uses time-based anomaly detection to identify unusual behavior based on historical time patterns. It dynamically learns from past activity to determine whether a login time is abnormal for an individual user or a group.

User-based time anomaly detection analyzer (AIML)

This model tracks when a user logs into different applications across various days and times.

Key functions include:

Establishing a baseline for each user's login behavior based on historical data.

Assigning each login an Application Trust Risk Score ranging from 0 to 1, where values close to 1 indicate normal behavior.

Example

User: John Doe – Senior Data Scientist

Work routine: Logs into work applications between 9 AM – 5 PM EST, Monday to Friday

Application usage: Uses Slack, Jira, and Microsoft 365 during work hours

Login frequency: Logs in 3–4 times a day, during morning and afternoon work sessions

| Date | Application | Login time | Application trust score | Interpretation |
|---|---|---|---|---|
| Jan 1 | Slack | 10:00 AM | 0.98 | Normal behavior |
| Jan 15 | Jira | 2:00 PM | 0.92 | Slightly different but still normal |
| Jan 20 | Microsoft 365 | 2:30 AM | 0.12 | Highly anomalous, unusual login time |

On Jan 1 and 15, John logs in during his usual work hours, and the model assigns a high trust score close to 1, indicating normal behavior.

On Jan 20, he logs in at 2:30 AM, an unusual time for him. The model assigns a low trust score of 0.12, indicating anomalous behavior.
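The following sketch shows one way a per-user time baseline could yield a score between 0 and 1: it builds a smoothed density over the user's historical login hours and normalizes by the density at the most typical time. The Gaussian kernel, the 1.5-hour bandwidth, the sample history, and the function names are assumptions for illustration, and the sketch is not expected to reproduce the exact scores in the table above.

```python
from math import exp


def circular_gap(a: float, b: float) -> float:
    """Distance in hours between two times of day on a 24-hour clock."""
    d = abs(a - b) % 24
    return min(d, 24 - d)


def density(hour: float, history, bandwidth: float = 1.5) -> float:
    """Unnormalized Gaussian kernel density of past login hours at `hour`."""
    return sum(exp(-0.5 * (circular_gap(hour, h) / bandwidth) ** 2) for h in history)


def trust_score(hour: float, history, bandwidth: float = 1.5) -> float:
    """Score in [0, 1]: density at `hour` relative to the user's most typical time."""
    if not history:
        return 0.0
    # Search a 15-minute grid for the peak of the user's baseline.
    peak = max(density(q / 4, history, bandwidth) for q in range(96))
    return min(1.0, density(hour, history, bandwidth) / peak)


# Hypothetical login hours (fractions of a day in hours) during a 9-to-5 routine.
history = [9.0, 9.5, 10.0, 11.0, 13.0, 14.0, 14.5, 16.0]

morning = trust_score(10.0, history)   # 10:00 AM
afternoon = trust_score(14.0, history) # 2:00 PM
night = trust_score(2.5, history)      # 2:30 AM
```

With this history, the morning and afternoon logins score close to 1, while the 2:30 AM login scores close to 0 because no past login falls anywhere near that time.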

Group-based time anomaly detection analyzer (AIML)

This model analyzes login patterns for users with shared characteristics, such as working in the same organization, timezone, or application environment.

Key functions include:

Establishing a baseline for when the group typically logs in.

Assigning a KTG (Known Time Group) Score ranging from 0 to 1.

Example

Consider a security team in a global organization whose members are all based in North America.

| Date | User | Location | Login time | Group's usual time | KTG score | Interpretation |
|---|---|---|---|---|---|---|
| Jan 1 | Bob | New York | 10:00 AM | 9 AM – 7 PM EST | 0.95 | Normal behavior |
| Jan 5 | Jack | Toronto | 6:30 PM | 9 AM – 7 PM EST | 0.89 | Normal behavior |
| Jan 12 | Charlie | London | 3:00 AM | 9 AM – 7 PM EST | 0.08 | Highly anomalous login time |

On Jan 1 and 5, Bob and Jack log in during typical group hours, and the model assigns a high KTG score, close to 1, indicating normal behavior.

On Jan 12, Charlie logs in at 3:00 AM London time, an unusual time for his North American-based team. The model assigns a low KTG score of 0.08, flagging it as anomalous.

Even if Charlie has no personal history of login attempts, the model detects unusual activity by comparing his behavior to the group’s baseline, allowing for early detection of suspicious logins.
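A group baseline only makes sense if every login is compared in the same time zone. The sketch below converts each login to the group's reference zone before checking it against the group's usual window, using the three logins from the example. It checks window membership only and does not compute a KTG score; the year, the fixed UTC-5 offset for EST, and the `within_group_hours` helper are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone

EST = timezone(timedelta(hours=-5), "EST")  # fixed offset, ignores daylight saving
GMT = timezone.utc                          # London is on GMT in January


def within_group_hours(login: datetime, start_hour: int = 9, end_hour: int = 19,
                       group_tz: timezone = EST) -> bool:
    """True when a timezone-aware login falls inside the group's usual window."""
    local = login.astimezone(group_tz)
    hour = local.hour + local.minute / 60
    return start_hour <= hour <= end_hour


# The year is arbitrary; the source gives only the month and day.
bob = datetime(2025, 1, 1, 10, 0, tzinfo=EST)      # New York, 10:00 AM
jack = datetime(2025, 1, 5, 18, 30, tzinfo=EST)    # Toronto, 6:30 PM
charlie = datetime(2025, 1, 12, 3, 0, tzinfo=GMT)  # London, 3:00 AM local time

results = {
    "Bob": within_group_hours(bob),
    "Jack": within_group_hours(jack),
    "Charlie": within_group_hours(charlie),
}
```

Charlie's 3:00 AM London login corresponds to 10:00 PM EST on the previous day, so it falls outside the group's 9 AM – 7 PM EST window even though he has no personal history to compare against.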